1: 任务介绍和准备工作

爬取豆瓣电影Top250的基本信息。

https://movie.douban.com/top250

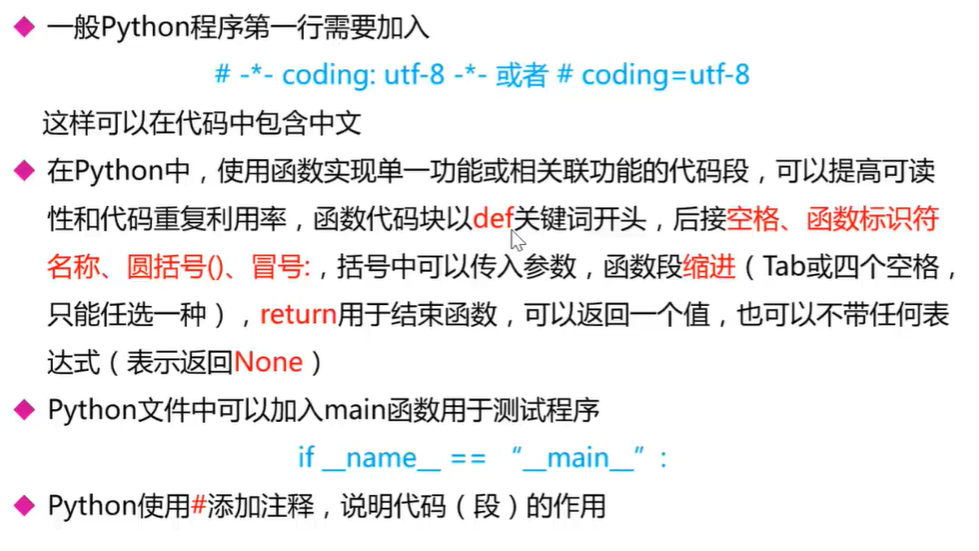

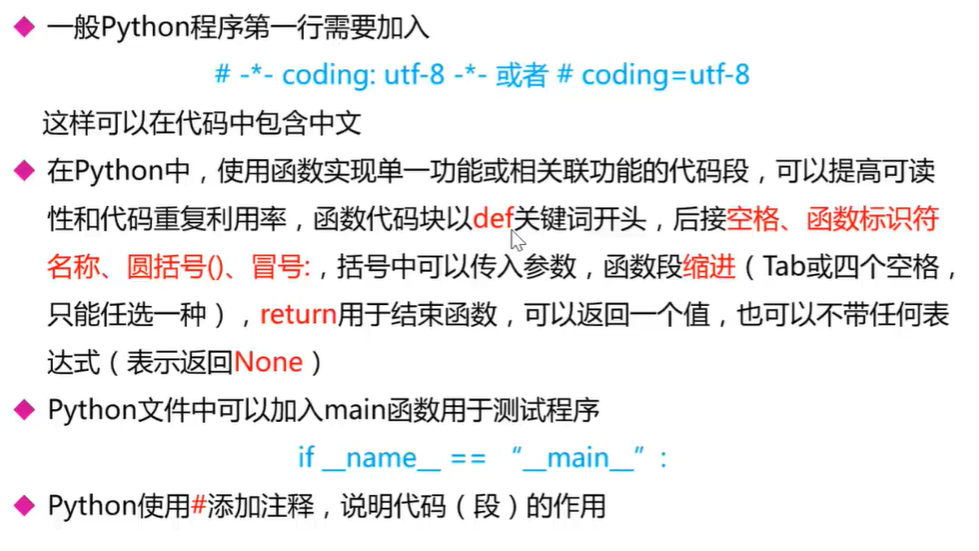

编码规范:

1

2

3

4

5

6

7

|

def hello():

print("hello")

if __name__ = "__main__":

hello()

|

引入模块:

目录结构:

- test1

1

2

3

4

5

6

| ```

\- t1.py

\- test2

|

1

2

3

4

5

6

7

8

9

|

\- t2.py

t1.py

```python

def add(a,b):

return a+b

print(add(a,b))

|

在t2.py中引入,t1.py

1

2

| from test1 import t1

print(t1.add(3,5))

|

引入第三方模块

1

2

3

4

5

| from bs4 import BeautifulSoup

import re

import urllib.request,urllib.error

import xlwt

import sqlite3

|

2:构建流程

2.1 基本框架

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

| def main():

baseurl = "https://movie.douban.com/top250?start="

datalist = getData(baseurl)

savepath = ".\\豆瓣电影Top250"

saveData(savepath)

def getData(baseurl):

datalist = []

return datalist

def saveData(savepath):

|

2.2 获取数据

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| def askURL(url):

head = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36"}

req = urllib.request.Request(url,headers=head)

html = ""

try:

response = urllib.request.urlospen(req)

html = response.read().decode("utf-8")

print(html)

except urllib.error.URLError as e:

if hasattr(e,"code"):s

print(e.code)

if hasattr(e,"reason"):

print(e.reason)

def getData(baseurl):

datalist = []

for i in range(0,10):

url = baseurl + str(i*25)

html = askURL(url)

return datalist

|

3:补充Urllib库

get请求

1

2

3

4

| import urllib.request

response = urllib.request.urlopen("https://www.baidu.com")

print(response.read().decode('utf-8'))

|

Post请求

1

2

3

4

5

6

| import urllib.request

import urllib,parse

data = bytes(urllib.parse.urlencode({"hello":"world"}),encoding="utf-8")

response = urlib.request.urlopen("http://httpbin.org/post",data = data)

print(response.read().decode("utf-8"))

|

超时处理:0.01秒内无反应

1

2

3

4

5

| try:

response = urlib.request.urlopen("http://httpbin.org/get",timeout = 0.01)

print(response.read().decode("utf-8"))

except urllib.error.URLError as e:

print("time out")

|

响应内容:

1

2

3

| response.status

response.getheader()

response.getheader("Server")

|

伪装:headers内容可以在访问网站时查看,request header

1

2

3

4

5

6

| url = "https://www.douban.com"

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36"}

data = bytes(urllib.parse.urlencode({"name":"eric"}),encoding="utf-8")

req = urllib.request.Request(url=url,data=data,headers=headers,method=method)

response = urllib.request.urlopen(req)

|

4:补充BeautifSoup库

将复杂的html文档转化为树形文档

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

| from bs4 import BeautifulSoup

file = open("./baidu.html","rb")

html = file.read()

bs = BeautifulSoup(html,"html.parser")

print(bs.title)

print(bs.title.string)

print(bs.a.attrs)

t_lists = bs.find_all("a")

t_lists = bs.find_all(re.compile("a"))

t_lists = bs.find_all(class="head")

t_lists = bs.find_all(class_=True)

t_lists = bs.find_all(text = re.compile("\d"))

t_lists = bs.find_all("a",limit=3)

bs.select('title')

bs.select(".mnav")

bs.select("#u1")

bs.select("a[class='bri']")

bs.select("head>title")

bs.select(".mnv ~ .bri")

|

更多内容:https://beautifulsoup.readthedocs.io/zh_CN/v4.4.0/

5:补充正则表达式